The promise of Artificial Intelligence is undeniable: trillions of dollars in economic value, unprecedented efficiency, and a new era of competitive advantage. Yet, for many organizations, the reality of AI adoption is far more sobering. Reports from institutions such as MIT and McKinsey point to a high failure rate, with one widely cited MIT report suggesting that **up to 95% of enterprise generative AI pilots fail to scale** or deliver meaningful return on investment [1].

This widespread disappointment is not a failure of the technology, but a failure of strategy. The core issue is that most businesses treat AI as a technical problem to be solved by data scientists, rather than a strategic transformation to be led by the C-suite. As a result, they fall victim to predictable and preventable **AI Strategy Failures**.

This comprehensive guide dissects the eight most common pitfalls that derail AI initiatives, from the initial planning stages to post-deployment scaling. More importantly, it provides a structured, three-pillar roadmap—**Problem-First, Data-First, People-First**—for overcoming these challenges, ensuring your organization moves from the struggling majority to the successful minority.

The Foundational Flaw: Strategic Misalignment

The most critical of all **AI Strategy Failures** occurs before the first line of code is written. It is the fundamental misalignment between the AI project and the core business objectives.

Failure 1: The Tech-First, Value-Second Approach

Many projects are born from a desire to use the latest technology—a “solution looking for a problem.” This “tech-first” mindset leads to sophisticated models that solve interesting technical challenges but fail to move the needle on key business metrics. Successful AI, conversely, is driven by a **value-first** approach, focusing on high-impact use cases that directly address a strategic pain point or unlock a new revenue stream [2].

“Industry stakeholders often misunderstand—or miscommunicate—what problem needs to be solved using AI… The first step to success is defining a clear business problem that is both valuable and feasible to solve with AI.” – RAND Corporation

**The Fix: Lead with the Business Problem.** The AI vision must be defined in collaboration with the C-suite, not in isolation by the IT department. Every project must be tied to a measurable outcome, whether it’s reducing churn, optimizing inventory, or accelerating drug discovery. For a deep dive on how to structure this process, refer to our guide on the 7 Proven Steps to Create an AI Business Strategy.

Failure 2: Lack of C-Suite Sponsorship and Strategic Buy-in

AI transformation requires significant investment, organizational change, and cross-functional cooperation. Without a champion at the highest level—the CEO or a dedicated C-suite leader—projects often stall due to resource conflicts, internal resistance, or a failure to integrate the new capability into existing workflows. This lack of executive buy-in is a primary reason why pilots fail to scale [3].

**The Fix: Secure a Strategic Mandate.** Executive sponsorship ensures that the AI strategy is aligned with the corporate strategy and that necessary resources (budget, talent, data access) are allocated. It also sends a clear message to the organization that AI is a strategic imperative, not an optional experiment.

The Data Deficit: Why Models Starve in Production

AI models are only as good as the data they are trained on. A major cluster of **AI Strategy Failures** stems from a fundamental underestimation of the data challenge.

Failure 3: The “Garbage In, Garbage Out” Problem

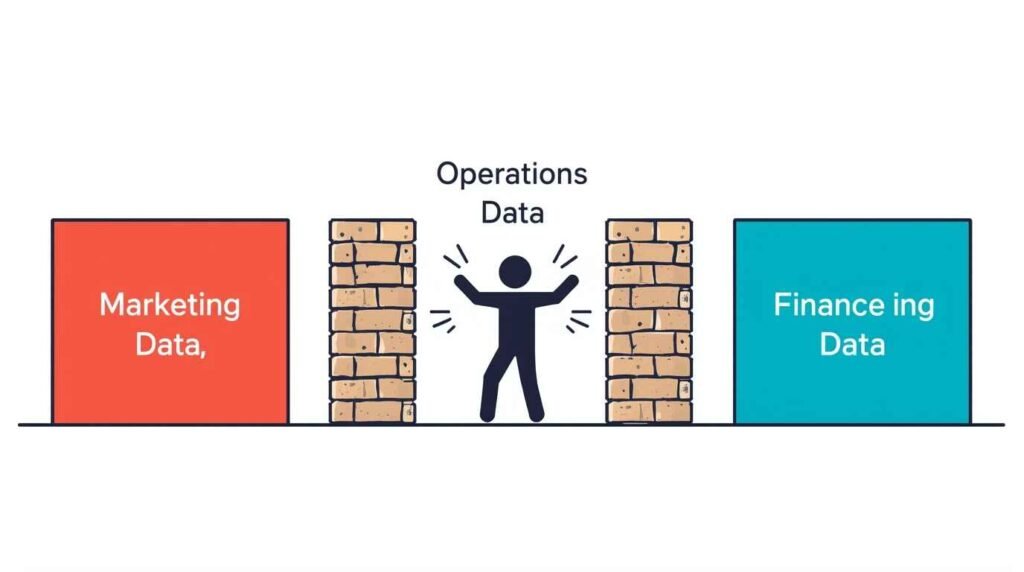

The paradoxical reality is that many companies have massive amounts of data, yet very little of it is clean, labeled, or ready for AI use. Data is often fragmented across silos, inconsistent in format, or riddled with errors and bias. Building a model on poor data guarantees a poor outcome, regardless of the model’s sophistication.

Failure 4: Neglecting Data Governance and Infrastructure

Many organizations rush to model-building without first investing in the foundational data infrastructure, governance, and quality controls. This leads to a situation where a successful pilot cannot be replicated because the data pipeline used in the lab is not scalable, secure, or compliant in a production environment [4].

**The Fix: Adopt a Data-First Approach.** Before any model is built, a significant portion of the budget and time must be dedicated to data cleansing, labeling, and establishing robust data governance. This includes defining data ownership, quality standards, and ethical use policies. Investing in a solid AI governance framework is not an overhead cost; it is a prerequisite for successful scaling.
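As a rough illustration of what a quality standard can look like in practice, the sketch below profiles a batch of records for missing values and exact duplicates, then checks the result against a gate before any modeling starts. The function names, the field names in the example, and the 5% and 1% thresholds are all hypothetical; real standards would come from the data owners defined in the governance process.

```python
from collections import Counter


def profile_records(records, required_fields):
    """Return per-field missing rates and the share of exact duplicate rows."""
    total = len(records)
    if total == 0:
        raise ValueError("no records to profile")

    # A value counts as missing when it is None or an empty string.
    missing_rate = {
        field: sum(1 for r in records if r.get(field) in (None, "")) / total
        for field in required_fields
    }

    # Rows that repeat an earlier row on every required field are duplicates.
    keys = Counter(tuple(r.get(f) for f in required_fields) for r in records)
    duplicates = sum(count - 1 for count in keys.values())

    return {
        "rows": total,
        "missing_rate": missing_rate,
        "duplicate_rate": duplicates / total,
    }


def passes_quality_gate(profile, max_missing=0.05, max_duplicates=0.01):
    """Hypothetical gate: thresholds are illustrative, not an industry standard."""
    fields_ok = all(rate <= max_missing for rate in profile["missing_rate"].values())
    return fields_ok and profile["duplicate_rate"] <= max_duplicates


# Example with made-up customer records.
sample = [
    {"customer_id": 1, "region": "EU", "churned": 0},
    {"customer_id": 2, "region": "", "churned": 1},
    {"customer_id": 2, "region": "", "churned": 1},
    {"customer_id": 3, "region": "US", "churned": None},
]
report = profile_records(sample, ["customer_id", "region", "churned"])
ready_for_modeling = passes_quality_gate(report)
```

A check like this is deliberately simple. Its value lies in running automatically on every data delivery, so that quality problems surface before a model is trained rather than after it reaches production.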

Table 1: The Core Causes and Solutions for AI Strategy Failures

| Failure Category | Common Pitfall | Strategic Solution |
|---|---|---|
| Strategy | Tech-First Mentality (Solution looking for a problem) | Value-First Approach (Lead with a measurable business problem) |
| Data | Poor Data Quality & Fragmentation (Garbage In, Garbage Out) | Data Governance & Infrastructure Investment (Data-First) |
| People | Siloed Teams & Cultural Resistance (Ignoring end-users) | Cross-Functional Collaboration & Change Management (People-First) |
| Operations | Failure to Scale (Neglecting MLOps and monitoring) | Continuous Monitoring & MLOps (Treat AI as a product) |

The People Problem: Culture, Skills, and Adoption Gaps

AI is a team sport. Even the most technically perfect model will fail if the people who are supposed to use it don’t trust it, don’t know how to integrate it into their workflow, or actively resist it. This is the “people problem,” a major contributor to **AI Strategy Failures**.

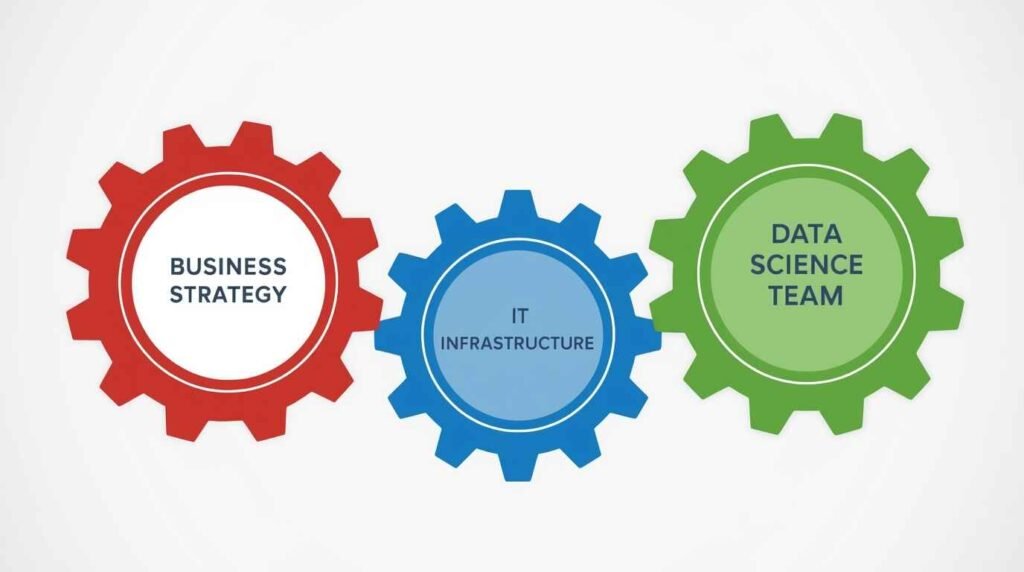

Failure 5: Lack of Cross-Functional Expertise

AI projects often require three distinct skill sets: **Data Science** (building the model), **Engineering** (deploying and maintaining the model), and **Domain Expertise** (understanding the business problem). When these teams operate in silos, the resulting model is either technically brilliant but irrelevant to the business, or perfectly aligned with the business but impossible to scale.

Failure 6: Ignoring the End-User and Adoption

A common mistake is to assume that if a model works, people will use it. In reality, end-users (e.g., sales reps, doctors, factory workers) are often hesitant to adopt AI tools that disrupt their established routines, especially if they don’t understand how the tool works or if it introduces new risks. Failure to manage this organizational change is a direct path to an unused, failed project [5].

**The Fix: Embrace a People-First Strategy.** Successful companies build cross-functional teams from the outset, ensuring domain experts are embedded with data scientists. They also invest heavily in change management, training, and fostering an AI-ready culture that encourages experimentation and trust. This is the essence of building an AI-enabled organization, where humans and machines work seamlessly together.

Scaling Stumbles: Operational and Ethical Hurdles

The final set of **AI Strategy Failures** occurs when a successful pilot attempts to transition to enterprise-wide production, encountering operational and ethical roadblocks.

Failure 7: Neglecting MLOps and Model Drift

Unlike traditional software, AI models degrade over time. As real-world data patterns change (e.g., new customer behavior, economic shifts), the model’s accuracy declines—a phenomenon known as model drift. Without a robust MLOps (Machine Learning Operations) framework to continuously monitor, retrain, and redeploy models, the ROI of the initial investment rapidly decays [6].
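One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. The sketch below is a minimal, dependency-free version. The function name, the bin count, and the alert threshold of 0.25 are assumptions for illustration (0.25 is a frequently quoted rule of thumb, not a universal standard), and a production MLOps stack would typically rely on a dedicated monitoring tool.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample (expected) and a recent sample (actual)."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread to bin")
    width = (hi - lo) / bins

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e_shares = bucket_shares(expected)
    a_shares = bucket_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


# Example with made-up numbers: production values have shifted upward.
training_values = list(range(100))
production_values = [v + 50 for v in range(100)]

psi = population_stability_index(training_values, production_values)
DRIFT_ALERT_THRESHOLD = 0.25  # hypothetical threshold; tune per feature
needs_retraining_review = psi > DRIFT_ALERT_THRESHOLD
```

Run on a schedule against each important input feature, a score like this gives the team an early, objective signal that retraining should be considered, instead of waiting for business metrics to decline.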

Failure 8: Ignoring Ethical, Regulatory, and Security Risks

The ethical dimension of AI—bias, fairness, transparency—is no longer a theoretical concern; it is a regulatory and business risk. Projects that fail to proactively address these issues can be derailed by internal compliance teams, external regulators, or public backlash. Furthermore, security vulnerabilities in AI systems (e.g., adversarial attacks) can lead to catastrophic failures.

**The Fix: Treat AI as a Product, Not a Project.** MLOps ensures that AI is managed with the same rigor as mission-critical software, including automated monitoring and maintenance. Simultaneously, a dedicated AI ethics and risk team must be integrated into the development lifecycle to ensure compliance and build public trust. This focus on long-term value and risk management is key to successful AI value realization.

Overcoming AI Strategy Failures: A Unified Roadmap

Overcoming the high rate of **AI Strategy Failures** requires a unified, disciplined approach that addresses the problem at its root. The solution is not a single tool or technique, but a commitment to three strategic pillars:

- **Problem-First:** Always start with a high-value, measurable business problem, not the technology.

- **Data-First:** Prioritize data quality, governance, and infrastructure as the foundation for all AI initiatives.

- **People-First:** Invest in cross-functional teams, change management, and a culture of trust and adoption.

By adopting this roadmap, organizations can transform their AI initiatives from isolated experiments into a scalable, strategic capability that delivers sustained competitive advantage. If you are looking to implement this kind of disciplined approach, consider seeking expert guidance to ensure your strategy is robust from day one. This is the core focus of effective AI Strategy Consulting.

Conclusion

The high failure rate of AI projects is a clear indicator that the industry must mature beyond the “proof of concept” phase. The next era of AI success will belong to organizations that embrace strategic discipline. By proactively identifying and addressing these common **AI Strategy Failures**, from misaligned goals to data deficiencies and cultural resistance, businesses can finally unlock the full, transformative potential of Artificial Intelligence and secure their place among the successful few.

References

[1] Fortune. (2025, August 18). MIT report: 95% of generative AI pilots at companies are failing.

[2] RAND Corporation. (2024, August 13). Why AI Projects Fail and How They Can Succeed.

[3] McKinsey & Company. (2025, March 12). The State of AI: Global survey.

[4] LexisNexis. (2025, September 15). Why AI projects fail: 8 common obstacles to success.

[5] Aptean. (2025, August 13). Top 6 AI Adoption Challenges and How To Overcome Them.

[6] HBR. (2025, October 21). Why Agentic AI Projects Fail—and How to Set Yours Up for Success.